Developing the ChavrutAI Chatbot, an AI Talmud Study Partner

Overview of initial work done on developing the work-in-progress AI-powered study assistant for Talmudic texts, with strict cost controls and security measures

When I first started working on ChavrutAI, my goal was simple: make Talmudic texts more accessible through modern technology. The live app already contains the core features: bilingual Hebrew/English display, navigation, and a nice amount of display customization features.1

The next step is building the AI chatbot. I cloned the current web app; the prototype for the web app with the chatbot is here.

Outline

Design Principles: Focus, Security, and Cost Control

1. Contextual Focus

2. Security Boundaries

3. Cost Management

The Technical Implementation

Backend Architecture

Frontend Integration

The Development Timeline

Core Infrastructure

Context Awareness

Text Processing Pipeline

Current Features and Capabilities

What Works Today

Next Steps and Future Development

Priority 1: Fix Text Pipeline

Design Principles: Focus, Security, and Cost Control

The core challenge is: How do I create an AI that stays laser-focused on the current page of text while providing meaningful educational assistance, all while keeping costs under control?

Before writing any code, I established three non-negotiable principles:

1. Contextual Focus

The AI must only discuss the current Talmud page being studied. No general knowledge questions, no discussions about other texts, no off-topic conversations. If a user asks about a different page, the AI should redirect them to navigate there first.

2. Security Boundaries

I needed multiple layers of protection against "jailbreaking"—attempts to trick the AI into ignoring its constraints. This included system prompt engineering, input sanitization, and response validation.

3. Cost Management

OpenAI's GPT-4o is powerful but expensive.2 I implemented strict controls: 50-word maximum responses, turn limits per session, daily usage caps, and real-time budget monitoring.

The Technical Implementation

Backend Architecture

The heart of the system handles GPT-4o integration with custom system prompts. I spent time crafting prompts that enforce topic boundaries while maintaining educational value.

The service also uses the processed text that users see in the interface.3

Frontend Integration

The user interface needed to be non-intrusive while remaining easily accessible. I implemented:

Chat Bubble Component

A floating action button positioned at the bottom center of the screen, carefully placed to avoid conflicts with existing navigation elements.

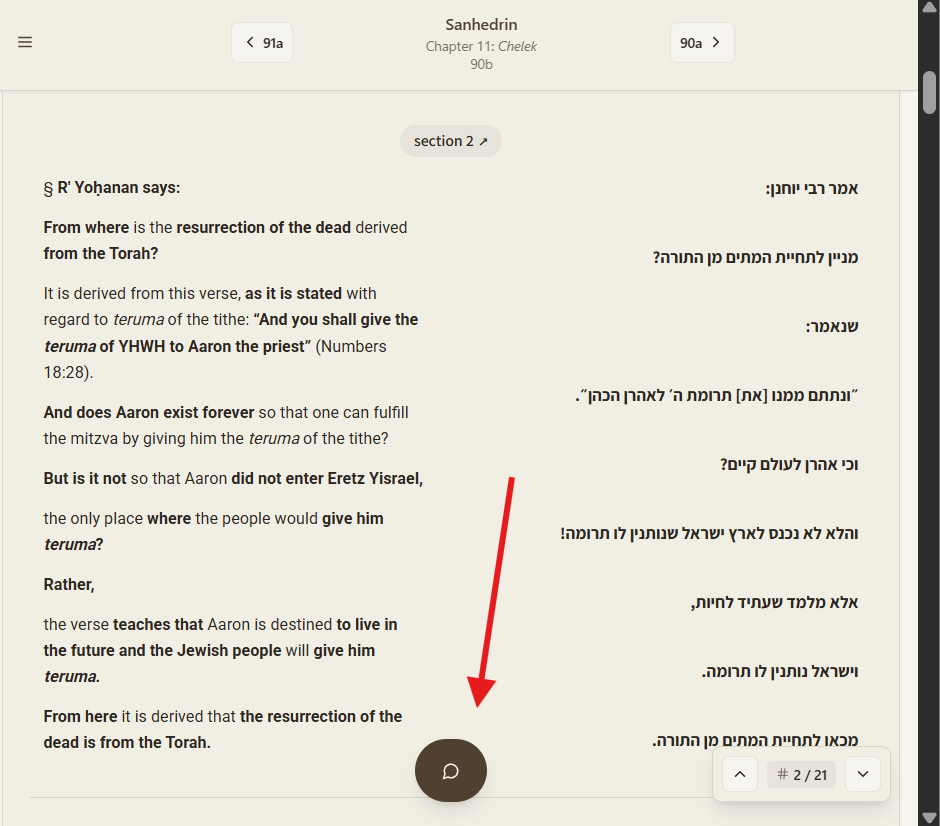

Screenshot (in tablet view): Chat bubble positioned on the page, bottom center (arrow added to the screenshot, to highlight)

Chat Modal Interface

When activated, the bubble expands into a full chat interface4 with conversation history, turn counter, and usage statistics.

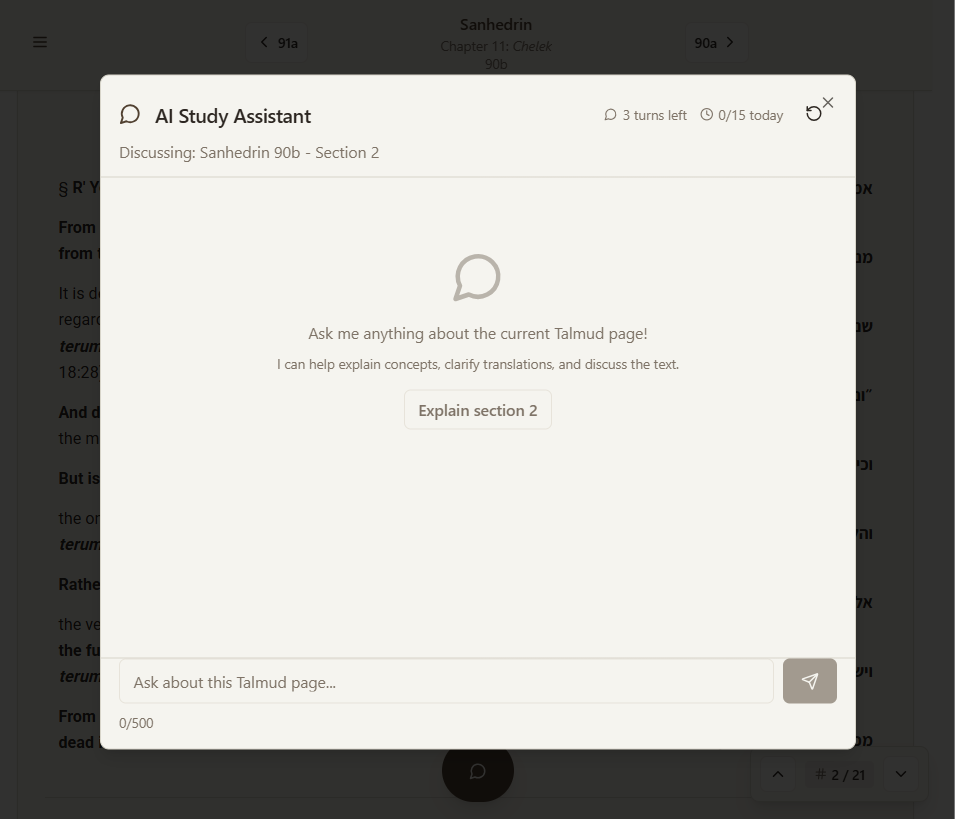

Screenshot: Expanded chat modal, appearance when opened:

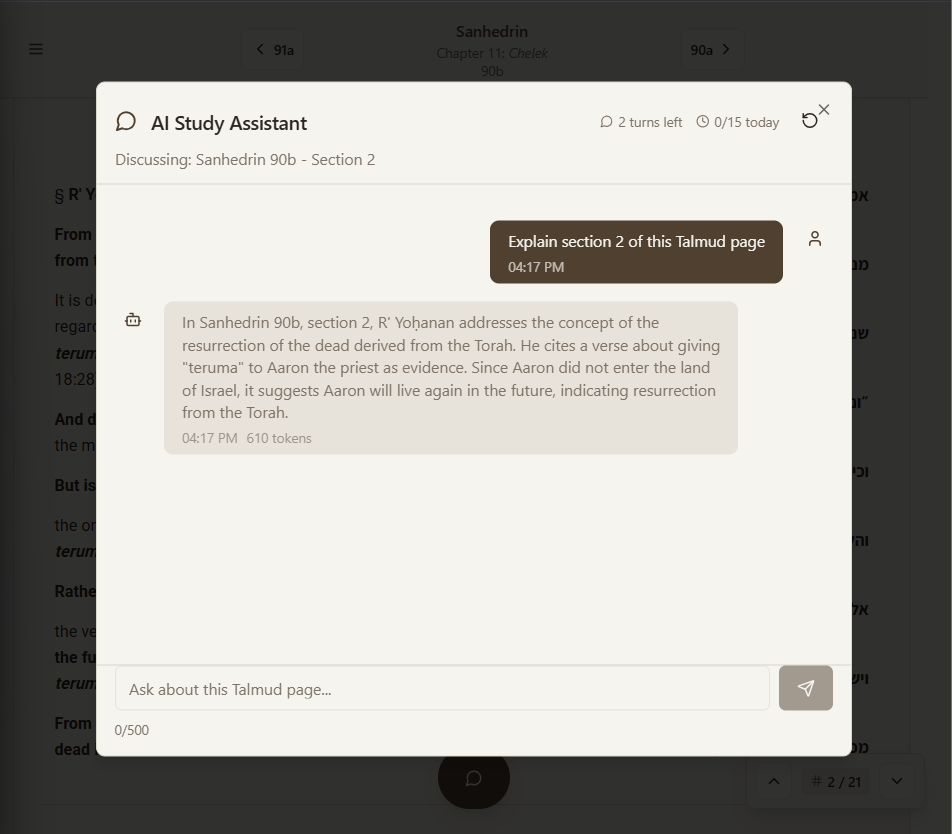

Screenshot: Expanded chat modal, appearance after prompted, with response:

Smart Context Integration

The chat system automatically knows which tractate, folio, and exact section the user is currently viewing. This context gets injected into every AI interaction.

The Development Timeline

Core Infrastructure

I started with the essential building blocks: OpenAI integration, basic API endpoints, and simple chat UI components.

Context Awareness

This phase focused on making the AI aware of the current page content. I implemented section-specific targeting: users can ask about particular sections within a folio, and the AI responds with precise contextual awareness.

Text Processing Pipeline

A challenging aspect is ensuring the AI received the same processed text that users see. ChavrutAI applies sophisticated text processing,5 and formatting normalization. Getting this pipeline working correctly took significant debugging.

Current Features and Capabilities

What Works Today

The chatbot is functional, with basic capabilities, functioning as an early, alpha-stage proof-of-concept:

Section-Aware Responses

Ask "What does section 3 discuss?" and the AI responds specifically about that content, not the entire page.

Cost Controls in Action

50-word response limit keeps costs manageable

3 turns per session prevent runaway conversations

15 daily turns provide meaningful assistance without breaking budgets

Real-time token counting and cost monitoring

Security That Actually Works

Try asking about politics or other topics; the AI politely redirects users back to the current Talmud content.

Next Steps and Future Development

Priority 1: Fix Text Pipeline

The current issue I'm working to resolve: the LLM getting the exact text that is displayed to the user.

After that, I’ll continue to fine-tune both the interface, as well as the underlying prompt.

Watch this space!

See my recent piece on the launch: “ChavrutAI Talmud Web App Launch: Review and Comparison with Similar Platforms”.

Notably, recently, the successor GPT-5 came out; I’ve been testing it extensively as well; for my purposes, it’s at most a relatively small incremental improvement, if at all an improvement, which is currently still unclear

E.g., processed Hebrew text - removing nikud for consistency - and English term replacements.

Currently it opens in a full modal window that blocks the rest of the page; I plan to make it less intrusive.

E.g.,: Hebrew nikud removal, English term replacements ("Lord" → "YHWH", "Rabbi" → "R'").