Part One of My Collected Blog Posts Uploaded, and Transforming and Formatting My Blog Archive Using Python

I'm happy to announce that Part 1 of my collected blog posts, titled "Talmud and Tech: Collected Blogposts," is now uploaded to my Academia.edu page.

I’m also attaching it here:

This first installment is a substantial 280+ pages. It's the first part of a planned 4-part series totaling 1200 pages.

This collection is a curated selection of pieces from my blog, with many stylistic improvements and adjustments.

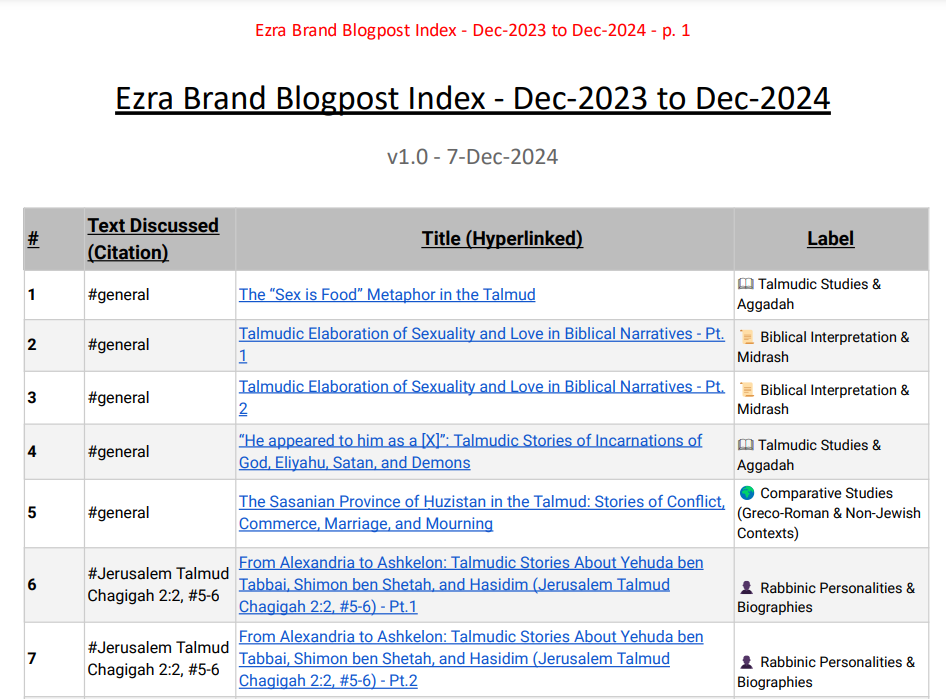

I've also taken the opportunity to update my blog index, which now covers December 2023 to December 2024. For those following my work, the index offers an organized overview of my posts and essays. You can find it on my blog index page here, under the section “Index of Talmud Content,” and on my Academia.edu page as “Personal Blog Index: December 2023 to December 2024.”1

I’m also attaching it here:

Here’s a screenshot of the beginning of it (I worked hard on making it as readable as possible):

I look forward to continuing to share my work with you all.

In the rest of this piece, I want to go into the technical side: how I used scripting to automate as much of the process as possible.

Transforming and Formatting My Blog Archive Using Python: A Case Study

Here was the challenge: I had 200+ blog posts stored as individual HTML files, with their titles stored separately in a CSV file. This is how Substack, the platform I use, exports blog posts.

Screenshots:

Admin tools, button to export:

Substack exports the posts as HTML files, one per post, in a folder called ‘posts’. Screenshot of the beginning of the list of posts, sorted by highest ID to lowest, as seen in Windows File Explorer:

The ‘Post ID’ column, in the CSV file which is also part of the general Substack export, as displayed in a Google Sheet:

The posts all contain Hebrew text, requiring special formatting to display correctly. My goal was to:

Combine the posts into a single document for easier repurposing.

Add the titles as headers to each post.

Format Hebrew paragraphs to display right-to-left (RTL).

How It Worked

Step 1: Matching Files with Titles

The first step was to create a CSV file listing post IDs and titles (taken from columns of the CSV file that Substack includes in its export), then upload it and match those IDs to the corresponding HTML files stored in a ZIP archive. Using Python’s pandas library, I created a mapping between file names and their titles. Then I used zipfile to extract and read only the files that matched the IDs.

Here’s the core logic:

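```python
# Build each post's path inside the ZIP from its post ID (first CSV column)
df['html_filename'] = 'posts/' + df.iloc[:, 0].astype(str) + '.html'
# headers holds the post titles, in the same row order as df
header_dict = dict(zip(df['html_filename'], headers))
```

This snippet gave me a dictionary mapping each file name to its title.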
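To make Step 1 concrete, here is a minimal, self-contained sketch of the matching logic. It is an illustration under stated assumptions rather than the exact script: it assumes the CSV's first column holds the post ID and its second column the title, and that the ZIP stores posts as `posts/<id>.html`. The function name `build_header_dict` and the sample IDs are hypothetical.

```python
import io
import zipfile

import pandas as pd


def build_header_dict(csv_source, zip_source):
    """Map ZIP member paths to post titles, keeping only IDs present in the ZIP.

    Assumption: column 0 holds the post ID and column 1 holds the title.
    """
    df = pd.read_csv(csv_source)
    df['html_filename'] = 'posts/' + df.iloc[:, 0].astype(str) + '.html'
    with zipfile.ZipFile(zip_source) as zf:
        members = set(zf.namelist())  # every file path stored in the archive
    matched = df[df['html_filename'].isin(members)]
    return dict(zip(matched['html_filename'], matched.iloc[:, 1]))


# Usage with small in-memory stand-ins for the real export files (hypothetical IDs)
csv_text = "post_id,title\n101.first-post,First Post\n102.second-post,Second Post\n"
buf = io.BytesIO()
with zipfile.ZipFile(buf, 'w') as zf:
    zf.writestr('posts/101.first-post.html', '<p>Hello</p>')
buf.seek(0)

header_dict = build_header_dict(io.StringIO(csv_text), buf)
print(header_dict)  # {'posts/101.first-post.html': 'First Post'}
```

Filtering with `isin` means a title whose HTML file is missing from the ZIP is simply skipped instead of raising an error later.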

Step 2: Combining HTML Files and Adding Titles

Once the files were matched, I looped through each one, adding its title as an <h1> tag above the content.

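```python
# f: the post's file, opened from the ZIP in binary mode, so its bytes are decoded
file_content = f"<h1>{header}</h1>\n" + f.read().decode('utf-8')
combined_data.append(file_content)  # collected, then joined into one document
```

The processed content from each file was then concatenated into a single HTML document, saved as combined_result.html.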
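The Step 2 loop can be sketched end to end as follows. This is a minimal illustration, not the exact script: it assumes a `header_dict` that maps ZIP paths to titles, as in Step 1, and `combine_posts` is a hypothetical name. It also adds `html.escape` on the title, which the snippet above does not do, so that a title containing `&` or `<` cannot break the markup.

```python
import html
import io
import zipfile


def combine_posts(zip_source, header_dict):
    """Read each matched post from the ZIP and prepend its title as an <h1>."""
    combined_data = []
    with zipfile.ZipFile(zip_source) as zf:
        for filename, header in header_dict.items():
            with zf.open(filename) as f:  # ZipExtFile yields bytes
                file_content = f"<h1>{html.escape(header)}</h1>\n" + f.read().decode('utf-8')
            combined_data.append(file_content)
    return "\n".join(combined_data)


# Usage with a small in-memory archive (hypothetical file names)
buf = io.BytesIO()
with zipfile.ZipFile(buf, 'w') as zf:
    zf.writestr('posts/101.html', '<p>שלום עולם</p>')  # str is stored as UTF-8
    zf.writestr('posts/102.html', '<p>Second post</p>')
buf.seek(0)

combined = combine_posts(buf, {'posts/101.html': 'Q&A', 'posts/102.html': 'Second'})
with open('combined_result.html', 'w', encoding='utf-8') as out:
    out.write(combined)
print(combined.splitlines()[0])  # <h1>Q&amp;A</h1>
```

Because Python dictionaries keep insertion order, the posts appear in the combined file in the same order as the rows of the CSV.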

Screenshot of the beginning of the HTML file, at this stage, as displayed in Google Chrome, at 110% zoom (notice that the Hebrew is currently left-to-right, which the next step will fix):

Step 3: Formatting Hebrew Text as Right-to-Left

Nearly all of my posts include full paragraphs of Hebrew text (mostly from rabbinic sources). Ideally, these paragraphs should be formatted as right-to-left (unfortunately, Substack does not offer that option on its platform).

Using the BeautifulSoup library, I scanned each paragraph and checked whether the majority of its characters were Hebrew. More specifically, the code checks whether more than 50% of the paragraph’s characters (with spaces and punctuation counted toward the total) fall within Unicode’s Hebrew block, U+0590 to U+05FF, which the regex matches as [\u0590-\u05FF].2

For those paragraphs, the code adds a dir="rtl" attribute:

```python
import re

from bs4 import BeautifulSoup


def is_majority_hebrew(text):
    """Check if the majority of characters in the text are Hebrew."""
    hebrew_chars = re.findall(r'[\u0590-\u05FF]', text)
    return len(hebrew_chars) > len(text) / 2


def set_rtl_for_hebrew_paragraphs(html_content):
    """Modify paragraphs with majority Hebrew text to be RTL."""
    soup = BeautifulSoup(html_content, 'html.parser')
    paragraphs = soup.find_all('p')  # Find all <p> tags
    for paragraph in paragraphs:
        if is_majority_hebrew(paragraph.get_text(strip=True)):
            # Set direction to RTL
            paragraph['dir'] = 'rtl'
    return str(soup)  # Return the modified HTML as a string
```

This relatively simple logic made the Hebrew paragraphs display correctly, though some manual adjustments were still needed afterward.
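One subtlety: because `is_majority_hebrew` divides by the full text length, spaces, digits, and punctuation all count against the 50% threshold. If that proves too strict for short paragraphs heavy on references, one alternative (not the method used above) is to compare Hebrew letters against all letters only. A minimal sketch, where `is_majority_hebrew_letters` is a hypothetical name:

```python
import re


def is_majority_hebrew(text):
    """Original check: Hebrew-block characters vs. the total text length."""
    hebrew_chars = re.findall(r'[\u0590-\u05FF]', text)
    return len(hebrew_chars) > len(text) / 2


def is_majority_hebrew_letters(text):
    """Hypothetical variant: Hebrew letters vs. all letters (spaces, digits, punctuation ignored)."""
    letters = [c for c in text if c.isalpha()]
    hebrew = [c for c in letters if '\u0590' <= c <= '\u05FF']
    return bool(letters) and len(hebrew) > len(letters) / 2


sample = "שלום (1:2-3)"  # 4 Hebrew letters out of 12 characters
print(is_majority_hebrew(sample))          # False
print(is_majority_hebrew_letters(sample))  # True
```

Counting only alphabetic characters on both sides keeps the ratio consistent; vowel points (niqqud) are combining marks rather than letters, so they drop out of both counts.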

Step 4: Saving the Updated File

The final combined and formatted document was saved as updated_example.html, ready for review or further processing. Because these steps were automated, the process could handle hundreds of posts with minimal effort.
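Step 4 amounts to reading the combined file, applying the RTL pass from Step 3, and writing the result out. A minimal sketch follows. The helper names `add_rtl` and `save_updated` are hypothetical. Prepending a `<meta charset="utf-8">` tag is an added assumption (it tells the browser to decode the Hebrew as UTF-8 when opening a local file), not necessarily part of the original script.

```python
import re
from pathlib import Path

from bs4 import BeautifulSoup


def add_rtl(html_content):
    """Same majority-Hebrew rule as Step 3; returns the modified HTML as a string."""
    soup = BeautifulSoup(html_content, 'html.parser')
    for p in soup.find_all('p'):
        text = p.get_text(strip=True)
        if len(re.findall(r'[\u0590-\u05FF]', text)) > len(text) / 2:
            p['dir'] = 'rtl'
    return str(soup)


def save_updated(src='combined_result.html', dst='updated_example.html'):
    """Read the combined file, apply RTL, and write the result as UTF-8."""
    content = Path(src).read_text(encoding='utf-8')
    # Assumption: a charset declaration helps the browser decode Hebrew in a local file
    page = '<meta charset="utf-8">\n' + add_rtl(content)
    Path(dst).write_text(page, encoding='utf-8')
    return page


# Usage with a tiny stand-in for the combined file
Path('combined_result.html').write_text('<h1>Demo</h1>\n<p>שלום עולם</p>', encoding='utf-8')
page = save_updated()
print(page)
```

Reading and writing with an explicit `encoding='utf-8'` avoids depending on the operating system's default encoding, which on Windows may not handle Hebrew.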

Step 5: Manual Post-Processing

I then opened the final HTML file in Chrome.

Screenshot of the beginning of the final HTML file, as displayed in Google Chrome, at 120% zoom (notice the now-correct right-to-left Hebrew):

I then copy-pasted all of it into a Google Doc, which retained all the formatting. I manually added a table of contents; removed the “Part 1” and “Part 2” titles (no longer relevant, since the posts are not being serialized); added page numbers and other headers; and made many other improvements.

Results

In a single Python script, I managed to:

Combine 50 blog posts into a single document (a manageable batch size).

Add titles as headers for better organization.

Ensure Hebrew text displayed correctly with RTL formatting.

This streamlined workflow saved hours of manual formatting and gave me a reusable system for future updates. It also scales: with just a few tweaks, I’ll be able to extend it to process all 200 posts, totaling about 1,500 pages.
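Extending the script to the full archive could be as simple as splitting the list of matched files into batches and running the combine step once per batch. A minimal sketch, assuming a plain list of file names and the batch size of 50 used above; `batches` is a hypothetical helper, and the file names are placeholders.

```python
def batches(items, size=50):
    """Split a list into consecutive chunks of at most `size` items."""
    return [items[i:i + size] for i in range(0, len(items), size)]


# Hypothetical: 200 post file names split into parts of 50
filenames = [f'posts/{n}.html' for n in range(1, 201)]
parts = batches(filenames)
for number, part in enumerate(parts, start=1):
    print(f'Part {number}: {len(part)} posts, {part[0]} to {part[-1]}')
```

If the post count is not an exact multiple of 50, the last batch is simply shorter.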

Why This Matters

Automation like this shows the power of combining a few basic tools (Python, pandas, zipfile, and BeautifulSoup) to handle repetitive, detail-oriented tasks. For anyone managing a large archive of content, these techniques can turn what feels like a mountain of work into a smooth, efficient process.

If you’re facing a similar challenge or just want to get more comfortable automating workflows, consider starting small. A little Python can go a long way.

The PDF preview on the Academia.edu page is currently unavailable, possibly because of complex formatting such as embedded icons. I may try to resolve this later; in the meantime, the PDF can still be downloaded from there without any problems.